LangChain is AMAZING | Quick Python Tutorial

I4mFqyqFkxg — Published on YouTube channel ArjanCodes on August 11, 2023, 3:00 PM

Summary

This summary is generated by AI and may contain inaccuracies.

- Arjan introduces LangChain, a library for creating applications that communicate with large language model APIs. He explains how to get an API key and how to create a chat model.
- He shows how to send a request to an LLM and get back the result as formatted, structured data that you can then use however you want in your code. He also suggests this could be an interesting way of quickly developing a compatibility layer around an old API.
- LangChain's documentation is quite extensive. The library defines a common group of concepts and the interactions between them, and its design combines ideas from several design patterns.

Video Description

👷 Review code better and faster with my 3-Factor Framework: https://arjan.codes/diagnosis.

LangChain is a great Python library for creating applications that communicate with Large Language Model (LLM) APIs. In this tutorial, I’ll show you how it works and also discuss its design.

🐱💻 Git repository: https://git.arjan.codes/2023/langchain

🎓 ArjanCodes Courses: https://www.arjancodes.com/courses/

🔖 Chapters:

0:00 Intro

0:42 LangChain Tutorial

12:23 LangChain’s design

15:58 Final Thoughts

#arjancodes #softwaredesign #python

Transcription

This video transcription is generated by AI and may contain inaccuracies.

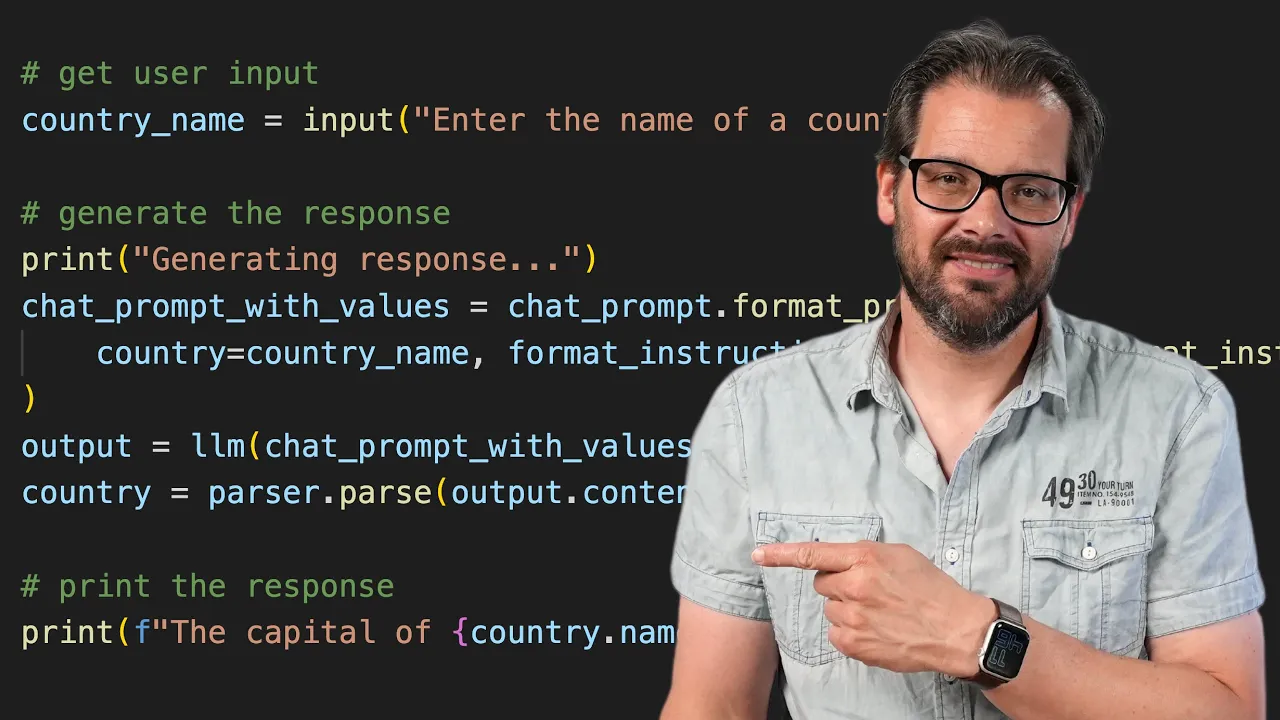

LangChain is a great library for creating applications that communicate with large language model APIs. You mentioned in a survey I recently posted that you want me to do more AI-focused content. Of course I do my best to listen to you, so here you go. Today I'll show you what you can do with LangChain, and it's pretty cool. But I wouldn't be Arjan if I didn't also talk about how it's been designed, and we can learn an important lesson from that.

By the way, if you're enjoying these types of videos, you'll surely also like my Discord server. It's a great, really helpful community with a ton of knowledgeable people. You can join for free using this link. And when you join, say hi. I always wave back.

Getting started with LangChain is actually really easy. You just create a large language model object and then you can call methods on it, for example to send a prompt and get a result. As a starting point, I have a mostly empty script here which just loads the OpenAI API key from a .env file. And of course, if you want to interact with a large language model like the one from OpenAI, you're going to need an API key. It's really easy to get one. You just have to create an account at OpenAI or any of its competitors, and then the web-based interface gives you a way to get an API key. Note, though, that as you use this key more and more, it's going to cost some money, because you're charged for the tokens that you request from the service.

So the first step is to create this LLM (large language model) object. To do that for OpenAI, we simply import it from LangChain. So from the llms module we're going to import OpenAI, and then in the main function we can create the LLM, which is going to be an OpenAI object, and we pass it the OpenAI key. And just to make sure that we actually pass it to the right argument, I'm going to supply the keyword as well.
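
As a rough sketch of the setup described so far, assuming the 2023-era `langchain.llms` import path and that the key is already available in an `OPENAI_API_KEY` environment variable (for example, loaded from the .env file). The variable name, the `load_api_key` helper, and the prompt text are illustrative assumptions and are not taken from the video's repository:

```python
import os
from typing import Mapping, Optional


def load_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the OpenAI API key, failing early if it is missing."""
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "")  # assumed variable name
    if not key:
        raise RuntimeError("Set OPENAI_API_KEY before running this script.")
    return key


def main() -> None:
    # Imported inside the function so the helper above still works
    # on a machine where LangChain is not installed.
    from langchain.llms import OpenAI  # import path as of mid-2023

    # Pass the key by keyword so it ends up in the right argument.
    llm = OpenAI(openai_api_key=load_api_key())

    # Hypothetical prompt; sending it is a billed API call.
    result = llm.predict("Give me 5 topics for YouTube videos about Python.")
    print(result)
```

Calling `main()` sends one request to OpenAI, so it needs both LangChain and a valid key. Newer LangChain releases have moved these classes to other packages, so the import may need adjusting.
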
And now we can simply send a prompt to this large language model, and then let's also print the result, like so. So this is all it takes. When I run this, this is what we get as a result. So here we have five really great ideas for YouTube videos about Python. So now you know what I'm going to do for the next couple of weeks.

Now, this doesn't actually work with the latest GPT models from OpenAI. This works with an older model. If you want to use one of the newer models like GPT-3.5 or GPT-4, then you're going to need to use a so-called chat model, which has a slightly different interface in LangChain. In this case, from the LangChain chat models we're going to import ChatOpenAI, which is the OpenAI chat model interface. So we're not going to use this anymore. Instead we create a ChatOpenAI model, and obviously we set the API key. But what we can now also do is define a model, which is going to be, let's say, gpt-3.5-turbo. And now, next to the OpenAI API key, we can also supply the model name, like so.

Here you see an example of what that generates. So we still get five video suggestions, but there's more detail. Well, this is GPT-3.5, so it's going to give more detail than some of the older models. But if I want to change this now, I can, for example, also supply GPT-4, and that's actually really easy. Now let me run this one more time. GPT-4 is obviously going to be a bit slower. And this is what we get. So GPT-4 didn't give us any extra information about what each video is about. But still, if I look at the topic suggestions, I think these are slightly nicer than the ones GPT-3.5 proposed.

Another really powerful thing you can do with LangChain is work with templates. For example, here I've created a prompt, country info, that's simply a phrase: provide information about a country. It's a template in which we can insert the name of the country.
As a first step, I ask the user for a country name, and then what we need to do is create a list of messages that we're going to send to the chatbot. If you work with templates, you have to use the message system of LangChain, which is slightly more complicated than just calling predict, but it also gives you a lot more flexibility. So to use these templates, we add a couple of imports. We're going to need two things. The first is the HumanMessagePromptTemplate. There are different types of templates that LangChain supports, including human messages and system messages. And I'm also going to need the ChatPromptTemplate class.

Now that we have the name of the country, the next step is to create the human message prompt from a template, and there we supply the prompt with the country information. Next we generate the chat prompt, for which we'll use the ChatPromptTemplate. And by the way, GitHub Copilot is generating some stuff here that's actually not correct, so I'm just going to ignore that. So this is going to need from_messages, and we supply the messages, like so.

The third step is to actually provide the data that we asked from the user here. By the way, the reason that I'm using a chat prompt here is that we can have multiple messages and then format all of them at once with a single call. So let's say we have a chat prompt with values, and that's the chat prompt on which we call format_prompt, and there we supply the country. Now we can generate a response, which is the LLM called with the chat prompt with values converted with to_messages. That gives the list of messages to send to the LLM. And finally we can print the response.

So what did we do? We created the prompt object using HumanMessagePromptTemplate. We then created a chat prompt, which is a sort of list of messages.
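
The template steps walked through above can be sketched roughly as follows. This assumes the mid-2023 LangChain import paths (`langchain.chat_models`, `langchain.prompts`). The exact template wording, the `ask_about_country` function name, and the default model are assumptions made for illustration:

```python
# Template text with a placeholder for the country name (wording assumed).
PROMPT_COUNTRY_INFO = "Provide information about {country}."


def ask_about_country(country: str, api_key: str,
                      model_name: str = "gpt-3.5-turbo") -> str:
    """Send the country prompt to a chat model and return the raw text."""
    # Imported here so the template constant can be inspected
    # without LangChain being installed.
    from langchain.chat_models import ChatOpenAI
    from langchain.prompts import ChatPromptTemplate, HumanMessagePromptTemplate

    llm = ChatOpenAI(model_name=model_name, openai_api_key=api_key)

    # 1. Build a human message from the template.
    message = HumanMessagePromptTemplate.from_template(PROMPT_COUNTRY_INFO)
    # 2. Wrap it in a chat prompt, which can hold several messages.
    chat_prompt = ChatPromptTemplate.from_messages([message])
    # 3. Fill in every placeholder with a single call.
    chat_prompt_with_values = chat_prompt.format_prompt(country=country)
    # 4. Convert to a list of messages and call the model.
    response = llm(chat_prompt_with_values.to_messages())
    return response.content
```

The placeholder uses the same `{name}` style as Python's `str.format`, so `PROMPT_COUNTRY_INFO.format(country="the Netherlands")` shows the text that ends up in the human message.
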
We then called format_prompt so that we could format these different prompts, and then we converted the result back into messages that the LLM can deal with. Let's run this. For example, we want some information about the Netherlands because, well, that's where I live. Obviously this takes a bit of time because we're waiting for the response from the LLM. And there we go. This is the response that we get. As you can see, there's an attribute called content that contains the response from the model.

Now this is raw text, so that's not really something we can do something with in our code directly. But LangChain also has a way to supply output formatting instructions to the model. For example, you could instruct a large language model to return a response as JSON data, which is pretty helpful because then you can parse that and use it in your own code. Let me show you an example of how to do that by combining LangChain with Pydantic.

The first thing that I'm going to do is create a Pydantic model. For that, of course, we need BaseModel, and we're also going to need Field. Let's say we just want to get the capital of the country. So here I've created a Country model based on Pydantic's BaseModel that has capital, which is a string field, and name, which is the name of the country. I've also supplied a description for each of these fields because that gives a bit of context to the LLM.

Then, in order to create formatted responses according to this model, what we need now comes from the LangChain output parsers. There are many different output parsers available, but we're going to use the Pydantic output parser. And then it's really simple to set this up. Next to the LLM, what we're also going to need is a parser, which is going to be a PydanticOutputParser, and we supply it the Pydantic object. Let me just add the keyword argument here so that it's clear.
What we then need to do is a simple extension of this prompt: we add format instructions, because of course we now have to tell the LLM in what format it is supposed to return the result. Then let me scroll down. Here we have our format_prompt call. We need to supply not just the country but also the format instructions. And what's nice about the output parser is that it can get the format instructions for us from the Pydantic model, which is really awesome. So then this is what we have: we're formatting the prompt with this information.

Now that we have the response back, we can use the parser to parse the content of the response. And then let's simply print the data for the time being. Now when we run this, let's use another country, Belgium. Why not? And you see, we now get an object with a capital, Brussels, and a name, Belgium. What's really cool is that this thing is now of type Country. So, for example, I can do data.capital. Let's run this again with another country, Germany. And then it simply gives us the capital of Germany.

Since we now have access to this formatted response data, we can do whatever we want with it in our code. For example, here I'm printing it in an f-string, or maybe you want to show it somewhere in a user interface. So let's run this one more time, and then you see that this works perfectly. Let's use a fake country and see what the capital of Zuckerberg is. Ooh, that doesn't exist. Okay, that's a pity. I thought it was a real country. Let's instruct the LLM that it can also make up stuff. There we go. Because I really want to know what the capital is of Zuckerberg. Mercville. Okay, then I could have expected that.

So what I've shown you now is how to send a request to an LLM and get back the result as formatted, structured data.
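
To make the idea of format instructions plus parsing concrete, here is a library-free sketch. It is not how LangChain's Pydantic output parser is implemented. It only mimics the three roles described above (a typed model, instructions derived from that model, and a parse step) using a dataclass and a hard-coded string standing in for the model's reply. All names in it are hypothetical:

```python
import json
from dataclasses import dataclass, fields


@dataclass
class Country:
    capital: str
    name: str


def format_instructions(model: type) -> str:
    """Derive a plain-language formatting instruction from the model's fields."""
    keys = ", ".join(f'"{f.name}"' for f in fields(model))
    return f"Reply with a single JSON object containing the keys {keys}."


def parse(text: str, model: type):
    """Turn the reply text into a typed object, failing on missing keys."""
    data = json.loads(text)
    return model(**{f.name: data[f.name] for f in fields(model)})


# Stand-in for the text a model might return after seeing the instructions.
fake_response = '{"capital": "Brussels", "name": "Belgium"}'

data = parse(fake_response, Country)
print(f"The capital of {data.name} is {data.capital}.")
# prints: The capital of Belgium is Brussels.
```

In the video, the same roles are played by the Pydantic model, the instructions the output parser generates from it, and the parser's parse step. A real model reply can be malformed, so production code would also need to handle `json.JSONDecodeError` and `KeyError`.
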
You can also do it the other way around: you can ask the LLM to call an API for you and then return the result. That's also relatively easy, as long as you supply the documentation of the API to the chat model, because otherwise it doesn't know how to call it. LangChain has an example for Open-Meteo, and what happens here is almost the same setup as we had before. We create the LLM, then we create an API chain, and that's basically what you need to do if you want to supply API information to the chat model so it can call the API. Then you run your prompt using that API chain and print the result. When I run this example, this is the result I get: 18.3 degrees in Amsterdam. And of course you can combine these things. You can add an output parser to this, so you get back formatted data.

This, by the way, could be an interesting way of quickly developing a compatibility layer around an old API. You could just supply the old documentation to ChatGPT and then let it generate the API response using the new specification that you need while you refactor your old API code. It is a bit slow and it's probably also a bit expensive, especially if you have lots of users, so I would probably not really do that, but it could be a potential application of this.

So what kind of thing are you thinking about doing with LangChain in your projects? Do you think there are any missing features that you'd really like to have? Let me know in the comments.

This, by the way, is LangChain's documentation page. As you can see, it's quite extensive. So you have several different modules. And what's really nice about LangChain is that it defines a common group of concepts and interactions between these concepts. For example, if you look at model input/output, Model I/O, you see that it has prompts, which in turn has prompt templates with lots of different options.
And we have language models, which are of course the different LLMs and chat models that you can use with LangChain. And like I showed you before, we have output parsers. So you can parse datetimes, lists, JSON, Pydantic. You can do other things here as well. So there are lots and lots of possibilities. And as you can also clearly see, for each concept there is a sort of hierarchy of possible implementations. We have a common thing called an output parser, but then you have specific implementations like the Pydantic output parser or the datetime parser, and the same goes for the chat models or for the LLMs. So you have a common structure called an LLM, and then you have subclasses that implement the functionality so that you can connect with that particular large language model.

In that sense, when you think about the design of LangChain, it actually combines ideas from different design patterns. One design pattern that comes to mind is the strategy pattern, which allows us to replace an algorithm with a specific implementation. When you look at output parsers, for example, that's something that is really close to that kind of pattern. But similarly, you can also recognize aspects of the bridge design pattern, which is a pattern that has multiple hierarchies. Normally you have two in the standard bridge pattern, and these hierarchies each have an abstraction, and they are connected at the abstract level. By the way, I did a video about the bridge a while ago. I've put a link here at the top. The idea of the bridge is that you can vary these different hierarchies without affecting the other hierarchies. And that's also something that you can see here. For example, I could write an output parser that generates a tree-like structure, but I can do that without having to do any work on the large language model side.
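
A small, library-free sketch may help make the two independent hierarchies concrete. None of the classes below exist in LangChain. They are hypothetical stand-ins, with toy "models" that only transform the prompt text, to show that either side can be swapped without touching the other:

```python
from typing import Protocol


class LanguageModel(Protocol):
    """Abstraction for the model hierarchy."""
    def complete(self, prompt: str) -> str: ...


class OutputParser(Protocol):
    """Abstraction for the parser hierarchy."""
    def parse(self, text: str) -> object: ...


class EchoModel:
    """Toy model: returns the prompt unchanged."""
    def complete(self, prompt: str) -> str:
        return prompt


class ShoutingModel:
    """Toy model: returns the prompt in upper case."""
    def complete(self, prompt: str) -> str:
        return prompt.upper()


class CommaListParser:
    """Splits comma-separated text into a list of trimmed items."""
    def parse(self, text: str) -> list[str]:
        return [item.strip() for item in text.split(",") if item.strip()]


class WordCountParser:
    """Counts whitespace-separated words."""
    def parse(self, text: str) -> int:
        return len(text.split())


def run(prompt: str, model: LanguageModel, parser: OutputParser) -> object:
    """Connect the two hierarchies at the abstract level only."""
    return parser.parse(model.complete(prompt))


print(run("a, b, c", EchoModel(), CommaListParser()))      # ['a', 'b', 'c']
print(run("a, b, c", ShoutingModel(), CommaListParser()))  # ['A', 'B', 'C']
print(run("one two three", EchoModel(), WordCountParser()))  # 3
```

Because `run` depends only on the two protocols, adding a new parser or a new model is a change on one side only, which is the property of the bridge idea described here.
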
And similarly, I can add compatibility with another large language model without having to change anything in the output parser. So that's clearly a feature of the bridge pattern. You can also recognize ideas from patterns like template method, where you provide specific implementations and then methods are called on them in a particular structure. And that's also sort of what's happening here. We create standard objects like LLMs, output parsers and prompt templates, and then we run them through a particular sequence, which is the template, and that uses those objects, calls methods on them, and then returns the result. So there are quite a few of these interesting patterns that you see in LangChain, even though it doesn't directly, in a hard-coded way, implement the bridge pattern. It doesn't call something a bridge or a strategy. It simply uses the ideas from those patterns to create a library that's well designed.

And by the way, if you'd like to become better at detecting these kinds of patterns in code yourself, then you should definitely check out my free workshop on code diagnosis. You can join via the link below. It's really practical. I show you a three-factor framework to help you review code more efficiently while still finding the problems and detecting the patterns fast. So again, it's totally free. You can sign up at arjan.codes/diagnosis. It contains tons of practical advice and takes about half an hour. I'm sure you're going to enjoy it a lot.

So what design lessons can we learn from LangChain? Well, one really important lesson, in my opinion, is that LangChain shows how important it is to define the concepts well. If you define the concepts wrong, then your library is not going to be designed well. So we have very clear concepts like prompts, language models, output parsers, et cetera. And it really shows that if you define these things well, they connect well, it works well, and it's easy to use.
I still think it would be nice if LangChain took a next step and also let us abstract away a bit more from whether something is an LLM or a chat model. I don't think a lot of people really care about that, and the way that we interact with it is mostly the same. So I think that would be a nice next step. But it really shows that it pays off to spend some time thinking about the concepts and how they are related.

Another really important lesson is that even though we have all these design patterns, it's not that important that you follow them strictly to the letter. It's way more important to think about the principles behind the patterns and apply those principles judiciously. Principles like coupling, cohesion, making sure you separate creating a resource from using a resource, keeping things simple, and so on. By focusing on the principles instead of the patterns, you're going to design great code. You can use newer language features that maybe weren't supported by the older design patterns. Overall, it's going to lead you to a great design. One example of a set of principles is called GRASP. Especially if you work with Python a lot, I think this is a great starting point. To learn more about that, watch this video next. Thanks for watching and see you next time.